Three papers co-authored by Dartmouth computer science researchers have been selected for spotlights at the 2023 Conference on Neural Information Processing Systems, regarded as one the most prestigious conferences in machine learning and artificial intelligence research.

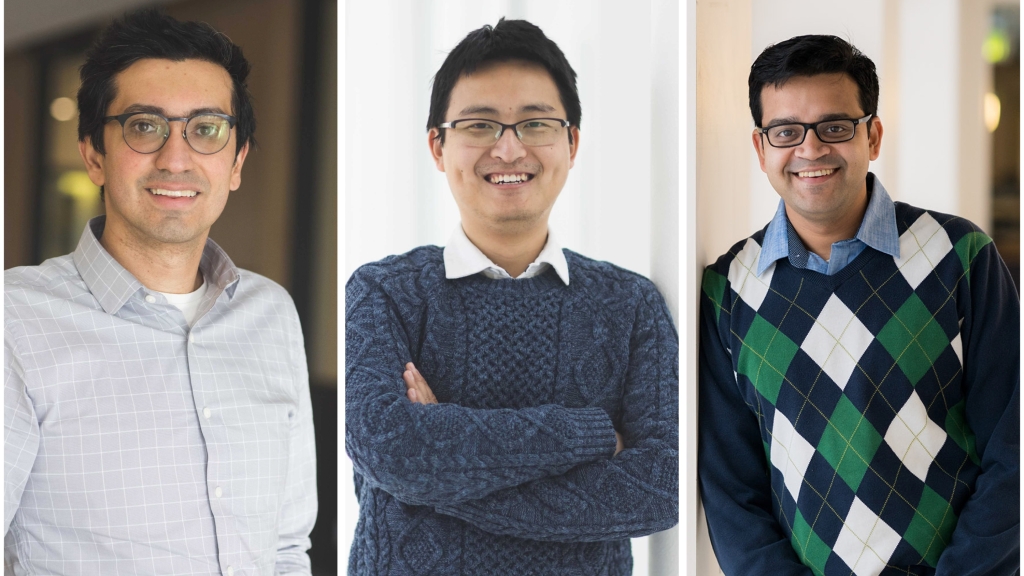

Congratulations to all the computer science faculty and graduate students who had their research accepted to the conference. A special shoutout to assitant professors Soroush Vosoughi and Yaoqing Yang, associate professor Deeparnab Chakrabarty, and their co-authors, whose papers, summarized below, were selected for spotlight presentations at the event, with an acceptance rate of 3%.

Bootstrapping Vision-Language Learning with Decoupled Language Pre-training

[Yiren Jian · Chongyang Gao · Soroush Vosoughi]

The paper advances vision-language models (VML). VMLs are a phase of transition of large language models (LLMs), incorporating visual information into them. In their paper, the researchers present a new method to enhance how computer models combine visual and language data.

Currently, visual features are used as prompts to guide language models, with a focus on determining the most relevant visual features for corresponding text. The researchers reverse this process by concentrating on the language component, specifically identifying the optimal prompts to align with visual features.

They introduce the Prompt-Transformer (P-Former), a model that predicts these ideal prompts, which is trained exclusively on linguistic data, bypassing the need for image-text pairings. Their Prompt-Transformer model predicts ideal language prompts for images and requires no paired image-text data. This approach improves the efficiency of existing models and proves versatile across different data types, including videos.

Temperature Balancing, Layer-wise Weight Analysis, and Neural Network Training

[Yefan Zhou · Yaoqing Yang et al.]

Most deep neural networks have complex multilayer structures, often seen as a barrier to understanding them transparently. The research outlined in this paper reveals an interesting insight: different layers of a neural network are usually not uniformly well-trained.

By identifying and addressing underperforming layers, the researchers enhance the overall network quality. Thus, this paper introduces a "model diagnostic" tool for improving training. The authors name the technique "temperature balancing," and demonstrate its effectiveness across various benchmarks and network architectures.

For instance, they show that temperature balancing improves test accuracy on image classification datasets such as CIFAR100, CIFAR100, Tiny ImageNet, and SVHN; it improves object detection on PASCAL VOC 2007; it improves language modeling on Language Modelling on Penn Treebank; it also outperforms more than five state-of-the-art learning rate schedulers in improving the test-time performance. All of these are rooted in our ability to dissect and diagnose network imbalances. Their approach is principled and is inspired by classical random matrix theory results.

Parallel Submodular Function Minimization

[Deeparnab Chakrabarty et al.]

Can an algorithm divide individuals in a social network into two groups while minimizing the number of friends split apart in an efficient way? The total number of such groupings is exponentially large (if N is the size of the population, then there are roughly 2^N ways to divide them), and so going over all of them is infeasible if N is moderately large.

It turns out that the above problem is a very special case of a fundamental optimization problem called submodular function minimization (SFM). It has been known since the 80's that this general problem, quite remarkably, can indeed be solved efficiently (the state-of-the-art algorithm makes roughly N^3 queries to the function).

Unfortunately, all known efficient algorithms are highly sequential in that they proceed in at least N-rounds where the queries made in a certain round depends on all the answers made in previous rounds. The question motivating the researchers was: can the algorithms be parallelized to run in fewer rounds without sacrificing too much on efficiency? In a previous paper, they show that the answer is negative. In general, there exist submodular functions which need roughly N-rounds to efficiently minimize.

In the upcoming NeurIPS paper, the researchers set out to find classes of functions where can actually be parallelized. In particular, they showed that if one focused on the question of approximately minimizing the function, then one could obtain a non-trivial algorithm which runs in roughly cuberoot of N-rounds. This means that if the range of the submodular function is small (that is, the output of the function takes integer values from -M to +M, for some small number M), the SFM can be solved in roughly cuberoot of N rounds.