Researchers from the Visual Computing Lab will present three papers at the 2025 SIGGRAPH Asia conference in Hong Kong this week.

Two papers led by Wojciech Jarosz, associate professor of computer science, focus on rendering techniques. The third, describing research led by Adithya Pediredla, assistant professor of computer science, presents a novel underwater communication technique that uses sound to modulate light.

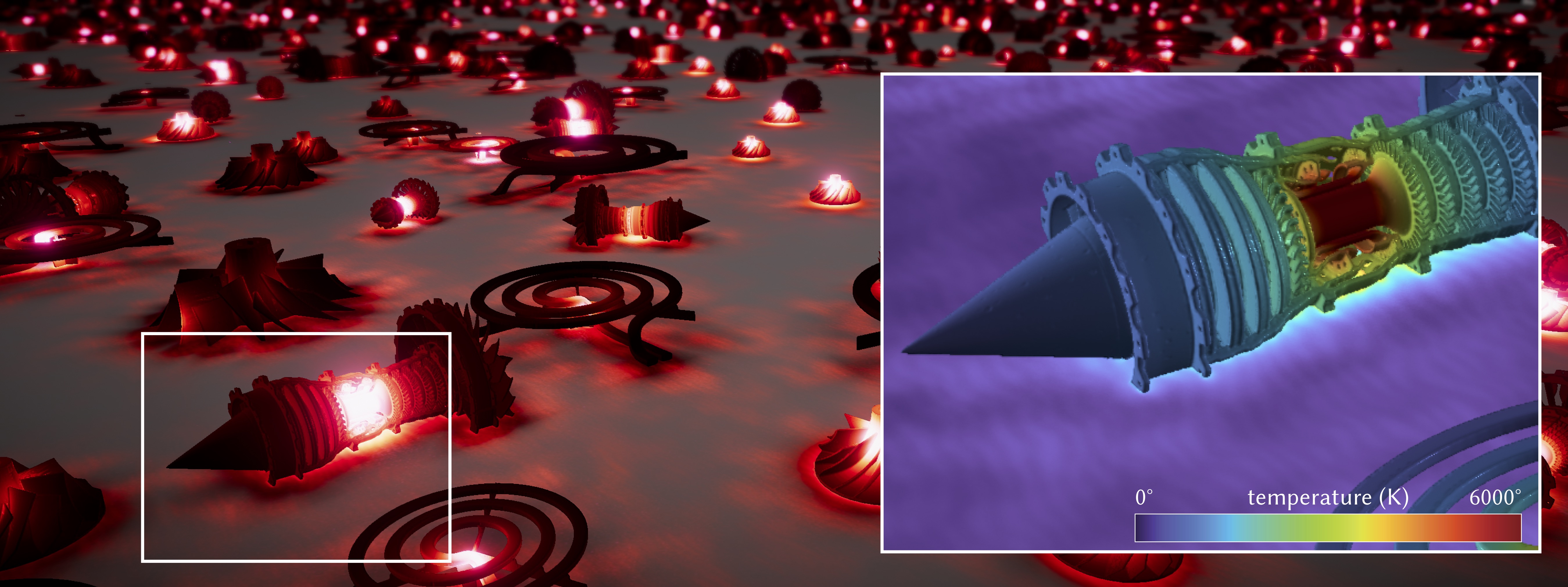

The first paper, authored by Jarosz, computer science PhD student Kehan Xu, and collaborators from Nvidia, tackles a long-standing challenge in computer graphics: how to seamlessly represent objects that behave partly like solid surfaces and partly like volumes.

"Imagine a scene that has a dune of sand; that's a solid surface. Now imagine there's wind blowing, with some of that sand getting stirred up into the air, looking more like a cloud," says Jarosz. Traditional rendering systems typically force designers to treat such materials as either solids or volumes, making it difficult to depict anything that falls in between.

The new method offers a unified representation for both, and its performance leap is striking—9,000 times faster than the researchers' approach from last year. "We're using building blocks that graphics people are already familiar with, like the addition of noisy textures to images to turn a solid surface into something that looks like a cloud," says Jarosz.

Beyond creating scenes using computer graphics, the framework also shows promise for scientific applications. Collaborations are already planned for remote sensing tasks such as modeling glaciers, where complex geometry and measurements taken across scales, from satellite images to micro-CT scans, often need to be reconciled.

The second paper focuses on solving differential equations that describe how heat moves through materials. Authors include Jarosz, PhD student Zihong Zhou, and collaborators from Nvidia.

Borrowing ideas from algorithms originally developed for feature-film rendering, the method dramatically reduces noise in simulations by reusing information from one point to inform its neighbors. This coherence-based strategy allows researchers to compute heat flow within objects and spaces more smoothly and in far less time than was possible with their previous work.

For engineers and architects, these advances could translate into better tools for visualizing temperature variations during the design process, supporting energy-efficient buildings and preventing overheating-related hazards.

Researchers from Pediredla's Rendering and Imaging Science lab are developing a promising new way for devices to talk to each other underwater—something that has always been surprisingly difficult.

Because water absorbs radio waves, tools like Wi-Fi and GPS barely work below the surface. That leaves sound as the main option, but it’s slow, allowing only simple, low-bandwidth communication.

The new prototype changes that dramatically, reaching a million bits per second, about a thousand times faster than today’s state-of-the-art systems. Instead of using sound itself to carry the information, the researchers use sound waves to alter the refractive index of water. In effect, the water becomes a rapidly tunable lens that can steer light efficiently and with very little power.
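To put those figures in perspective, here is a rough back-of-the-envelope sketch in Python. Only the rate of a million bits per second and the factor of about a thousand come from the reported results. The 500 kB payload is a hypothetical example, and the baseline rate is simply what the thousandfold ratio implies, not a measured figure.

```python
# Back-of-the-envelope comparison of transfer times.
# From the article: ~1,000,000 bits/s, about 1,000x faster than current systems.
# Assumption (not from the article): a hypothetical 500 kB payload, e.g. one image.

NEW_RATE_BPS = 1_000_000                     # "a million bits per second"
SPEEDUP = 1_000                              # "about a thousand times faster"
BASELINE_RATE_BPS = NEW_RATE_BPS / SPEEDUP   # implied baseline: about 1,000 bits/s

PAYLOAD_BYTES = 500_000                      # hypothetical payload size


def transfer_seconds(payload_bytes, rate_bps):
    """Time in seconds to send payload_bytes at rate_bps, ignoring protocol overhead."""
    return payload_bytes * 8 / rate_bps


new_s = transfer_seconds(PAYLOAD_BYTES, NEW_RATE_BPS)
old_s = transfer_seconds(PAYLOAD_BYTES, BASELINE_RATE_BPS)

print(f"New prototype: {new_s:.0f} s; implied baseline: {old_s / 60:.0f} min")
```

Under these assumptions, a payload that would take more than an hour over the implied baseline link goes through in a few seconds. Real throughput would also depend on overhead and channel conditions, which this sketch ignores.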

"We can even stream videos at the speeds that we are able to achieve," says Pediredla, who is excited about the possibilities the technique could open up. "Swarms of underwater drones might be able to team up to construct structures underwater or explore natural waters if they are able to communicate reliably with each other," he says.

Master's student Atul Agarwal, Guarini '25, and PhD candidate Dhawal Sirikonda are lead authors on the SIGGRAPH paper. Collaborators include Associate Professor Alberto Quattrini Li, an expert on autonomous mobile robotics, and Xia Zhou, associate professor at Columbia University, whose research centers on mobile computing.

The paper details early tests on an optical table in the lab, which show that the technique is robust even in cloudy or choppy waters. Efforts to bring the technique out of the lab and take the plunge into deeper waters are underway.