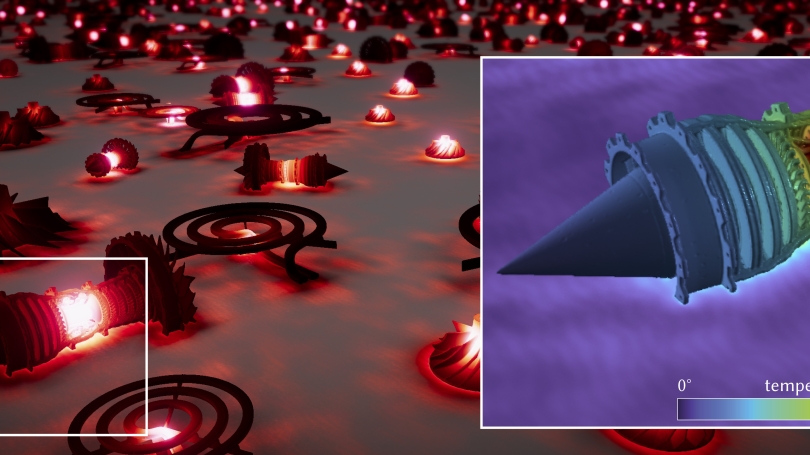

From antenna design to biomolecular simulation to understanding heat flow in a building, scientists rely on a class of mathematical equations called partial differential equations (PDEs) to model physical phenomena that are at once intricate and dynamic. Simulation techniques allow researchers to analyze physical systems, such as the variation of temperature across a rocket engine, without the cost and safety concerns of physical experiments. The catch is that the PDEs for complex real-world phenomena are notoriously difficult to solve.

Researchers from the Visual Computing Lab (VCL) and their collaborators at Carnegie Mellon University draw on tried-and-tested techniques for realistically lighting virtual scenes to simulate complex, real-world PDEs more accurately. Their work, which will be presented at SIGGRAPH 2022 in Vancouver later this summer, earned an honorable mention at the conference's first-ever Technical Papers Awards.

"It turns out that many of the techniques we have developed for rendering realistic images of virtual scenes have ideas that can be applied to simulating other physical processes, like how heat transfers between surfaces," says Wojciech Jarosz, associate professor of computer science. His expertise is in rendering scenes that contain clouds or fog. The way heat disperses through a medium, setting nearby molecules in motion as it dissipates outward, is a lot like what happens when a flashlight is placed inside a cloud, says Jarosz.

There are many computational methods for finding approximate numerical solutions to PDEs. A common approach is to dice the scene into small "finite elements," within each of which physical quantities are assumed to vary in a simple way (for example, linearly), so that an approximate solution can be computed. This approach struggles when the quantity of interest varies rapidly in space, because capturing that variation requires a very large number of tiny elements. It is also hard to apply to complex geometries, such as a rocket engine.
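To make the "finite elements" idea concrete, here is a minimal sketch, not the approach used in the researchers' work, that solves the one-dimensional model problem -u'' = f on [0, 1] with u(0) = u(1) = 0 using piecewise-linear elements on a uniform mesh. The function name `fem_1d_poisson`, the constant source `f`, and the mesh are illustrative assumptions. Assuming u is linear inside each element turns the PDE into a tridiagonal linear system, solved here with the Thomas algorithm.

```python
def fem_1d_poisson(n, f=1.0):
    """Solve -u'' = f on [0, 1], u(0) = u(1) = 0, with n interior nodes.

    Uses piecewise-linear ("hat") elements on a uniform mesh. The load
    vector h * f is exact only because f is assumed constant here.
    """
    h = 1.0 / (n + 1)
    # Stiffness matrix is tridiagonal: 2/h on the diagonal, -1/h off it.
    a = [-1.0 / h] * n  # sub-diagonal (a[0] unused)
    b = [2.0 / h] * n   # diagonal
    c = [-1.0 / h] * n  # super-diagonal (c[n - 1] unused)
    d = [h * f] * n     # load vector: integral of f times each hat function

    # Thomas algorithm, forward sweep: eliminate the sub-diagonal.
    for i in range(1, n):
        w = a[i] / b[i - 1]
        b[i] -= w * c[i - 1]
        d[i] -= w * d[i - 1]

    # Back substitution for the nodal values.
    u = [0.0] * n
    u[-1] = d[-1] / b[-1]
    for i in range(n - 2, -1, -1):
        u[i] = (d[i] - c[i] * u[i + 1]) / b[i]

    nodes = [(i + 1) * h for i in range(n)]
    return nodes, u


nodes, u = fem_1d_poisson(9)
for x, ui in zip(nodes, u):
    print(f"x = {x:.1f}  u = {ui:.5f}")
```

With f = 1 the exact solution is u(x) = x(1 - x)/2, and in this 1D setting linear elements reproduce it exactly at the nodes. The difficulty the article describes appears in higher dimensions, where the elements must conform to intricate geometry.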

"The computer graphics community was in a similar situation 20-30 years ago," says Jarosz. "It was possible to simulate how light bounces around in a scene to produce remarkably photorealistic images, but only for simple scenes, since you needed to dice up the surfaces into tiny elements. Using it to render the complex scenes we see in movies was completely out of the question."

A rapid evolution in rendering techniques in the last decade or two has transformed the field, enabling animators to bring clouds and many other complex scenes to life on screen. So-called Monte Carlo techniques, which now dominate the field, do not need to partition the scene into simpler elements. Instead, they simulate light by looking at the random paths photons take from light sources as they move through the scene before arriving at a camera sensor (or our eyes).

"The same techniques can be applied to model heat," says Dario Seyb, Guarini '23, a co-author of the SIGGRAPH paper. "To visualize the idea, imagine pollen dispersing from a point inside an empty box," Seyb says. The pollen would float through the air, landing at different points on the box's walls; some points would collect more pollen than others. And this pattern would change if there were airflow within the box.
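The pollen picture can be turned into a simple, if slow, estimator. The sketch below is a hypothetical illustration, not code from the paper: it estimates the steady-state temperature at an interior point of a square by releasing random walkers on a grid, letting each one wander until it hits a wall, and averaging the wall temperatures where the walkers land. The grid size, starting node, walker count, and the boundary temperature `wall_temperature(x, y) = x` are all assumptions, chosen so that the exact answer is known (the temperature equals x everywhere inside).

```python
import random


def wall_temperature(x, y):
    # Hypothetical boundary data: temperature rises linearly from the
    # left wall (0) to the right wall (1).
    return x


def grid_walk_temperature(i, j, n=20, walkers=5000, seed=1):
    """Estimate the temperature at grid node (i, j) of an n-by-n grid
    covering the unit square, by averaging where random walkers land."""
    rng = random.Random(seed)
    steps = [(1, 0), (-1, 0), (0, 1), (0, -1)]
    total = 0.0
    for _ in range(walkers):
        x, y = i, j
        # Wander one grid cell at a time until the walker reaches a wall.
        while 0 < x < n and 0 < y < n:
            dx, dy = rng.choice(steps)
            x += dx
            y += dy
        # Record the temperature where this "pollen grain" landed.
        total += wall_temperature(x / n, y / n)
    return total / walkers


print(grid_walk_temperature(6, 4))  # close to 0.3, the exact value at x = 0.3
```

Every walker takes many tiny steps before it reaches a wall, which is why this naive version becomes expensive as the grid is refined.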

"It turns out that you can describe the heat arriving at a certain point by looking at where tiny, simulated particles end up on surfaces in your scene," he says. The researchers use a fast algorithm called "walk on spheres" to simulate where the particles would end up.
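Walk on spheres avoids the many tiny steps of a grid walk by taking large jumps. From the current point it finds the distance d to the nearest boundary, then jumps to a uniformly random point on the circle (in 3D, the sphere) of radius d around it. This is valid because a random walker starting at the center of a circle is equally likely to first leave it at any point along its edge. Below is a minimal 2D sketch for the steady-state heat (Laplace) equation on the unit disk. The domain, the boundary data g(x, y) = x (whose exact interior solution is u = x), the stopping tolerance `eps`, and all function names are illustrative assumptions, and the sketch omits the spatially varying material properties that the SIGGRAPH paper adds.

```python
import math
import random


def distance_to_boundary(x, y):
    # Domain is the unit disk: distance from an interior point to the circle.
    return 1.0 - math.hypot(x, y)


def boundary_value(x, y):
    # Hypothetical temperature prescribed on the boundary circle. Since
    # g(x, y) = x is harmonic, the exact solution inside is u(x, y) = x.
    return x


def walk_on_spheres(x, y, rng, eps=1e-4, max_steps=10_000):
    """Run one walk from (x, y) and return the boundary value where it ends."""
    for _ in range(max_steps):
        d = distance_to_boundary(x, y)
        if d < eps:
            break  # close enough to the boundary to stop
        # Jump to a uniformly random point on the largest circle that
        # still fits inside the domain.
        theta = rng.uniform(0.0, 2.0 * math.pi)
        x += d * math.cos(theta)
        y += d * math.sin(theta)
    # Snap to the nearest boundary point and read off its temperature.
    r = math.hypot(x, y)
    return boundary_value(x / r, y / r)


def estimate(x, y, n_walks=20_000, seed=0):
    """Monte Carlo estimate of u(x, y): the average over many walks."""
    rng = random.Random(seed)
    total = sum(walk_on_spheres(x, y, rng) for _ in range(n_walks))
    return total / n_walks


print(estimate(0.3, 0.2))  # close to 0.3, the exact value u(0.3, 0.2)
```

Because each jump covers the largest safe distance, a walk needs far fewer steps than a grid walk; for convex domains like this disk, the expected number of jumps grows only logarithmically as `eps` shrinks. No mesh of the interior is ever built, only a distance-to-boundary query.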

In their SIGGRAPH paper, they extend this method to account for spatially varying material properties that affect the physical process. A simple example is a rod made of several different metals: heat conducts at different rates along different parts of the rod, depending on its local composition.
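For a one-dimensional rod, the effect of varying composition can be checked by hand. Assuming steady state, no heat loss through the sides of the rod, and perfect contact between segments, Fourier's law makes the segments behave like thermal resistors in series: a segment of length L and conductivity k contributes a resistance of L/k per unit cross-sectional area, and the same heat flux q = (T_left - T_right) / sum(L_i / k_i) flows through every segment. The sketch below uses made-up lengths and conductivities; it is a textbook closed form for building intuition, not the Monte Carlo method from the paper, and `composite_rod_temperatures` is a hypothetical name.

```python
def composite_rod_temperatures(lengths, conductivities, t_left, t_right):
    """Steady-state 1D conduction through segments joined in series.

    Returns the heat flux per unit area and the temperatures at the left
    end, at each interface between segments, and at the right end.
    """
    # Thermal resistance per unit area of each segment: L / k.
    resistances = [L / k for L, k in zip(lengths, conductivities)]
    # The same flux passes through every segment.
    flux = (t_left - t_right) / sum(resistances)
    temps = [t_left]
    for r in resistances:
        # Temperature drops by flux * resistance across each segment.
        temps.append(temps[-1] - flux * r)
    return flux, temps


# Hypothetical rod: a conductive half (k = 4) joined to a poor conductor (k = 1).
flux, temps = composite_rod_temperatures([0.5, 0.5], [4.0, 1.0], 100.0, 0.0)
print(flux, temps)
```

The two halves carry the same flux, but the more conductive half needs only a quarter of the temperature gradient to do so, so most of the 100-degree difference (80 of it) falls across the less conductive half.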

To demonstrate the effectiveness of their technique, the researchers model the heat distribution in a virtual scene populated with an infinite plane of heat radiators that have a complex geometry and are built from composite materials. "Accounting for spatially varying material properties is essential for capturing the rich behavior of the world around us," says Seyb. "Even a single wall in a building contains layers of many different materials that together influence how that wall responds to heat or sound."

Other rendering techniques can be imported into PDE solvers too, says Jarosz. In another publication, VCL researchers Yang Qi, Guarini '22; Benedikt Bitterli, Guarini '21; Seyb; and Jarosz showed how to adapt so-called "bidirectional" algorithms from rendering to model heat distribution.

"We create paths of light partially from the camera and partially from the light source and try to create a picture that traces the light going all the way from the light source to the camera," Jarosz explains. Depending on the type of lighting, it can be more efficient to build paths starting from the observer rather than from the light source, says Jarosz. The same idea carries over to the problem of heat diffusion. The work was presented at the Eurographics Symposium on Rendering in Prague earlier this month.

"Computer-generated imagery made a seismic shift in scene complexity and realism with the move to Monte Carlo methods," says Jarosz. "Simulations of PDEs could be at the cusp of a similar big leap forward. We've just scratched the surface."